HTCondor

You can use HTCondor to run the nextnano software on your local computer infrastructure (“on-premises”). nextnanomat submits a job either to your local computer or to the “HTCondor” cluster. In both cases, the results of the calculations are stored on your local computer.

This feature is only supported with our new license system.

HTCondor on nextnanomat

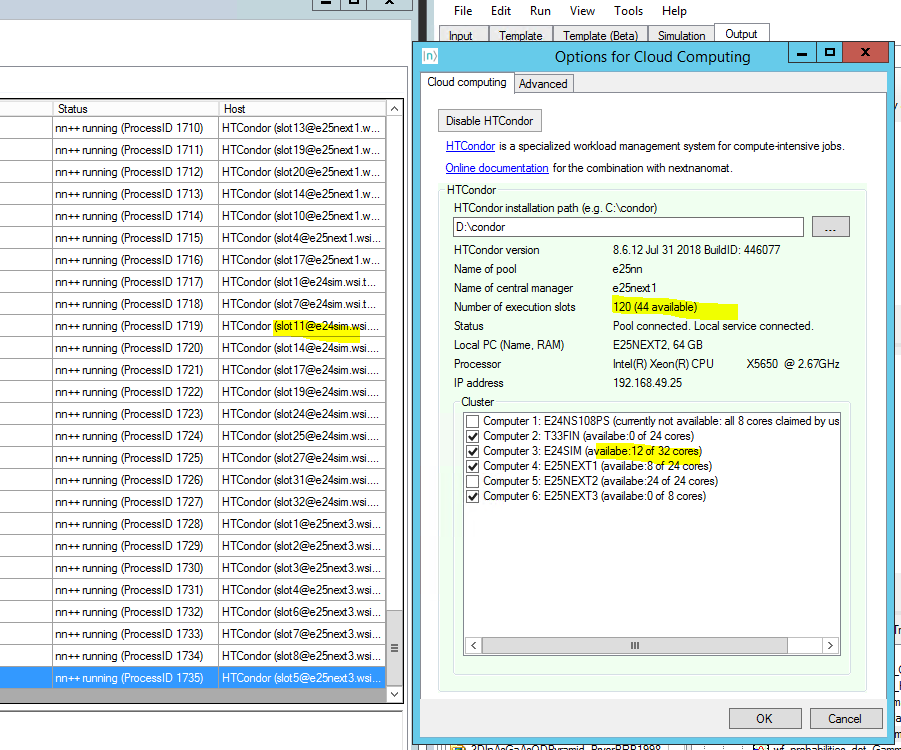

The screenshot from nextnanomat shows six computers connected to the HTCondor pool called e25nn. 120 slots are configured, of which 44 are currently available. Computers 2, 3, 4 and 6 are selected to accept jobs; computers 2 and 6 are currently not available because they are in use.

Figure 5: Screenshot taken from nextnanomat with the integrated HTCondor feature.

Recommended Installation Process

Download the HTCondor installer from the HTCondor website.

On the webpage, click on Download and go to Current Stable Release of UW Madison (as of September 24, 2020: HTCondor 8.8.10). We recommend the Windows file in Native Packages. The filenames look similar to this one: condor-8.8.10-513586-Windows-x64.msi (version 8.8.10).

Select the file, agree to the license agreement and download the .msi file. When you download it, you can optionally enter your name, email address and institution and subscribe to the HTCondor newsletter.

Install HTCondor.

Start the installer.

Click Next and then accept the License Agreement. You now have two options. One special computer manages all HTCondor jobs (the Central Manager); the other computers act as submit/execute nodes. If there is no Central Manager yet, we have to create a new pool.

- If you are on the Central Manager, choose Create a new HTCondor Pool and fill in the name of the pool, e.g. nextnanoHTCondorPool. This is a unique name for your pool of machines.

- If you are not the Central Manager, choose Join an existing HTCondor Pool and fill in the hostname of the central manager, e.g. the name of the computer where nextnanoHTCondorPool has been created.

Tick Submit jobs to HTCondor Pool and choose Always run jobs and never suspend them. (Alternatives: If you do not want other people to run jobs on your machine at all, select Do not run jobs on this machine. If you do not want other people to run jobs on your machine while you are working, select When keyboard has been idle for 15 minutes. You can of course modify these settings later.)

Fill in your domain name, for example your Windows domain, e.g. yourcompanyname.com (without www). All PCs of your network should get the same domain name; this does not necessarily have to be your Windows domain.

Hostname of SMTP server and email address of administrator: not needed currently, leave them blank.

Path to Java Virtual Machine: not needed currently, leave it blank.

Host with Read access: *

Host with Write access: $(CONDOR_HOST), $(IP_ADDRESS), *.yourcompanyname.com, 192.168.178.* (replace *.cs.wisc.edu with your domain name and add your local IP subnet, e.g. 192.168.178.*). On Windows, you can find your IP subnet by opening the Command Prompt cmd.exe and typing ipconfig.

Host with Administrator access: * (or $(IP_ADDRESS))

Enable VM Universe: No

Choose an installation directory and press Next (e.g. C:\condor\). We do not recommend the directory Program Files because its write permissions cause problems.

Press Install and type in the Administrator password of your PC. (You need Administrator rights.) Once HTCondor is installed, restart the computer. Your new pool or pool member should then be up and running.

A few more setup steps

To be able to submit jobs from nextnanomat to HTCondor, you have to store your credentials once. Open a command shell and type the following command:

condor_store_cred add

Enter your password and you are ready to submit your first HTCondor job.

If this does not work, try

condor_store_cred add -debug

for more output information on the error.

Please make sure that nextnanomat has successfully found the HTCondor pool. In nextnanomat, go to Tools->Options->Cloud computing. If everything is set up correctly, the “HTCondor” section is highlighted in green, and the available computers show up under “Cluster”. If this is not the case, you may not have installed HTCondor on the computer where you are running nextnanomat. Please also check that the HTCondor installation path is set correctly within nextnanomat; e.g. the default path C:\condor might not be the one where you installed HTCondor.

Summary of settings (Example)

Hostname (for HTCondor pool): computername.yourcompanyname.com

Policy: "Always run jobs"

Accounting domain: yourcompanyname.com

Read access: *

Write access: $(CONDOR_HOST), $(IP_ADDRESS), *.yourcompanyname.com, 192.168.178.*

Administrator: $(IP_ADDRESS)
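The example values above end up as entries in condor_config. The following Python sketch renders them as text so you can see which answer lands in which setting. The function render_config and its parameters are hypothetical, and mapping the accounting domain to UID_DOMAIN and the pool hostname to CONDOR_HOST is an assumption based on the Config file section of this page; the installer writes the real file.

```python
def render_config(condor_host: str, domain: str, subnet: str) -> str:
    """Render the example installer answers as condor_config lines (illustrative sketch)."""
    lines = [
        f"CONDOR_HOST = {condor_host}",          # assumed: "Hostname (for HTCondor pool)"
        f"UID_DOMAIN = {domain}",                # assumed: "Accounting domain"
        "use POLICY : ALWAYS_RUN_JOBS",          # "Always run jobs"
        "ALLOW_READ = *",
        # Write access: central manager, this machine, the whole domain and the local subnet
        f"ALLOW_WRITE = $(CONDOR_HOST), $(IP_ADDRESS), *.{domain}, {subnet}",
        "ALLOW_ADMINISTRATOR = $(IP_ADDRESS)",
    ]
    return "\n".join(lines)
```

For example, print(render_config("computername.yourcompanyname.com", "yourcompanyname.com", "192.168.178.*")) prints the six lines corresponding to the summary above.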

Config file

You can find your HTCondor config settings in the file C:\condor\condor_config. Let us look at an example:

- Your company is called Simpson.

- Your Windows domain is called simpson.com.

- Your HTCondor pool shall have the name TheSimpsonsCondorPool.

- The HTCondor host that manages the HTCondor jobs has the computer name homer.simpson.com.

- Your computer is called lisa.simpson.com.

- The computers in your network have the IP range 192.168.188.* (or 2001:db8:2042::* in IPv6).

RELEASE_DIR = C:\condor

LOCAL_CONFIG_FILE = $(LOCAL_DIR)\condor_config.local

REQUIRE_LOCAL_CONFIG_FILE = FALSE

LOCAL_CONFIG_DIR = $(LOCAL_DIR)\config

use SECURITY : HOST_BASED

# On the central manager (the computer called homer):
#CONDOR_HOST = $(FULL_HOSTNAME)

# On the computer called lisa:
CONDOR_HOST = homer

# Only on the computer called homer:
COLLECTOR_NAME = TheSimpsonsCondorPool

#UID_DOMAIN = # empty if you do not have a domain

UID_DOMAIN = simpson.com

# The following two entries are missing if you do not have a domain:
SOFT_UID_DOMAIN = TRUE

FILESYSTEM_DOMAIN = simpson.com

CONDOR_ADMIN =

SMTP_SERVER =

ALLOW_READ = *

ALLOW_WRITE = $(CONDOR_HOST), $(IP_ADDRESS), *.simpson.com, 192.168.188.*, 2001:db8:2042::*

ALLOW_ADMINISTRATOR = $(IP_ADDRESS)

use POLICY : ALWAYS_RUN_JOBS

#use POLICY : DESKTOP

WANT_VACATE = FALSE

WANT_SUSPEND = TRUE

# On the computer called homer:
#DAEMON_LIST = MASTER SCHEDD COLLECTOR NEGOTIATOR STARTD

# On the computer called lisa, if the "keyboard idle for 15 minutes" option was chosen:
#DAEMON_LIST = MASTER SCHEDD STARTD KBDD

# On the computer called lisa:
DAEMON_LIST = MASTER SCHEDD STARTD
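To see which values a condor_config file like the one above sets, the following Python sketch reads simple NAME = value lines and expands $(NAME) references defined in the same file. It is a simplified reader for illustration, not HTCondor's parser: it skips comment lines and use metaknobs, does not follow LOCAL_CONFIG_FILE includes, and leaves built-in macros such as $(FULL_HOSTNAME) or $(IP_ADDRESS) unexpanded. The function names are hypothetical; the authoritative view is condor_config_val, which asks HTCondor itself.

```python
import re

MACRO = re.compile(r"\$\((\w+)\)")  # matches $(NAME)


def read_config(text: str) -> dict:
    """Collect 'NAME = value' lines; '#' comment lines and 'use ...' metaknobs are skipped."""
    settings = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or line.lower().startswith("use "):
            continue
        name, sep, value = line.partition("=")
        if sep:
            # Configuration names are case-insensitive, so store them upper-case
            settings[name.strip().upper()] = value.strip()
    return settings


def expand(value: str, settings: dict, depth: int = 10) -> str:
    """Replace $(NAME) with values from the same file; unknown names stay as they are."""
    for _ in range(depth):
        new = MACRO.sub(lambda m: settings.get(m.group(1).upper(), m.group(0)), value)
        if new == value:
            break
        value = new
    return value
```

For example, cfg = read_config(Path(r"C:\condor\condor_config").read_text()) (with from pathlib import Path) followed by expand(cfg["LOCAL_CONFIG_FILE"], cfg) shows the resolved path of the local config file.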

Configuring a pool without a domain

It is also possible to set up an HTCondor pool with computers that are not on a domain by using the NO_DNS option in the config file.

This also provides an easy way to set up a mixed pool with Windows and Linux machines. Below are example configurations for the central manager and the submit/execute nodes on Windows and Linux, respectively. On Windows machines, the user passwords need to be stored with the condor_store_cred add command as above; on Linux machines, no password is needed.

Central Manager on Windows:

RELEASE_DIR = C:\condor

LOCAL_DIR = $(RELEASE_DIR)

LOCAL_CONFIG_FILE = $(LOCAL_DIR)\condor_config.local

REQUIRE_LOCAL_CONFIG_FILE = FALSE

use SECURITY : HOST_BASED

CONDOR_HOST = $(FULL_HOSTNAME)

COLLECTOR_NAME = EXAMPLE_POOL

NO_DNS = True

DEFAULT_DOMAIN_NAME = MY_DOMAIN

ALLOW_CONFIG = $(IP_ADDRESS)

ALLOW_ADMINISTRATOR = $(CONDOR_HOST), $(IP_ADDRESS), condor_pool@$(UID_DOMAIN), EXAMPLE_POOL

ALLOW_READ = $(IP_ADDRESS)/8, $(CONDOR_HOST)

ALLOW_WRITE = $(IP_ADDRESS)/8, $(CONDOR_HOST)

ALLOW_NEGOTIATOR = $(CONDOR_HOST), condor_pool@$(UID_DOMAIN)

ALLOW_ADVERTISE_MASTER = $(IP_ADDRESS)/8, $(CONDOR_HOST)

ALLOW_ADVERTISE_STARTD = $(IP_ADDRESS)/8, $(CONDOR_HOST)

ALLOW_ADVERTISE_SCHEDD = $(IP_ADDRESS)/8, $(CONDOR_HOST)

ALLOW_DAEMON = condor_pool@$(UID_DOMAIN), condor@$(UID_DOMAIN), $(IP_ADDRESS)

DAEMON_LIST = MASTER STARTD SCHEDD NEGOTIATOR COLLECTOR

use POLICY : ALWAYS_RUN_JOBS

WANT_VACATE = FALSE

WANT_SUSPEND = TRUE
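These configurations grant access with entries such as $(IP_ADDRESS)/8. The following Python sketch, using the standard ipaddress module, shows which peers such an entry admits, assuming HTCondor interprets the suffix like ordinary CIDR notation; the function name allowed_by_prefix is hypothetical. With a /8 prefix, every address that shares the first octet with this machine is accepted, e.g. all 192.*.*.* addresses for a machine at 192.168.188.10.

```python
import ipaddress


def allowed_by_prefix(own_ip: str, prefix: int, peer_ip: str) -> bool:
    """Check whether peer_ip lies in the network '<own_ip>/<prefix>'."""
    # strict=False masks the host bits, e.g. 192.168.188.10/8 -> 192.0.0.0/8
    network = ipaddress.ip_network(f"{own_ip}/{prefix}", strict=False)
    return ipaddress.ip_address(peer_ip) in network
```

Comparing allowed_by_prefix("192.168.188.10", 8, "192.168.1.20") (True) with the same call using prefix 24 (False) shows how much narrower a /24 entry would be.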

Submit/Execute Node on Windows:

RELEASE_DIR = C:\condor

LOCAL_DIR = $(RELEASE_DIR)

LOCAL_CONFIG_FILE = $(LOCAL_DIR)\condor_config.local

REQUIRE_LOCAL_CONFIG_FILE = FALSE

use SECURITY : HOST_BASED

# Substitute the IP address of the central manager:
CONDOR_HOST = 192.168.188.xy

COLLECTOR_HOST = $(CONDOR_HOST)

NO_DNS = True

DEFAULT_DOMAIN_NAME = MY_DOMAIN

ALLOW_CONFIG = $(IP_ADDRESS), $(CONDOR_HOST)

ALLOW_ADMINISTRATOR = $(CONDOR_HOST), $(IP_ADDRESS), condor_pool@$(UID_DOMAIN), EXAMPLE_POOL

ALLOW_READ = $(IP_ADDRESS)/8, $(CONDOR_HOST)

ALLOW_WRITE = $(IP_ADDRESS)/8, $(CONDOR_HOST)

ALLOW_ADVERTISE_MASTER = $(IP_ADDRESS)/8, $(CONDOR_HOST)

ALLOW_ADVERTISE_STARTD = $(IP_ADDRESS)/8, $(CONDOR_HOST)

ALLOW_ADVERTISE_SCHEDD = $(IP_ADDRESS)/8, $(CONDOR_HOST)

ALLOW_DAEMON = condor_pool@$(UID_DOMAIN), condor@$(UID_DOMAIN), $(IP_ADDRESS), $(CONDOR_HOST)

DAEMON_LIST = MASTER STARTD SCHEDD

use POLICY : ALWAYS_RUN_JOBS

WANT_VACATE = FALSE

WANT_SUSPEND = TRUE

Central Manager on Linux:

RELEASE_DIR = /usr

LOCAL_DIR = /var

LOCAL_CONFIG_FILE = /etc/condor/condor_config.local

REQUIRE_LOCAL_CONFIG_FILE = false

LOCAL_CONFIG_DIR = /etc/condor/config.d

## Pathnames

RUN = $(LOCAL_DIR)/run/condor

LOG = $(LOCAL_DIR)/log/condor

LOCK = $(LOCAL_DIR)/lock/condor

SPOOL = $(LOCAL_DIR)/spool/condor

EXECUTE = $(LOCAL_DIR)/lib/condor/execute

BIN = $(RELEASE_DIR)/bin

LIB = $(RELEASE_DIR)/lib/condor

INCLUDE = $(RELEASE_DIR)/include/condor

SBIN = $(RELEASE_DIR)/sbin

LIBEXEC = $(RELEASE_DIR)/lib/condor/libexec

SHARE = $(RELEASE_DIR)/share/condor

GANGLIA_LIB64_PATH = /lib,/usr/lib,/usr/local/lib

PROCD_ADDRESS = $(RUN)/procd_pipe

use SECURITY : HOST_BASED

CONDOR_HOST = $(FULL_HOSTNAME)

COLLECTOR_NAME = EXAMPLE_POOL

NO_DNS = True

DEFAULT_DOMAIN_NAME = MY_DOMAIN

ALLOW_CONFIG = $(IP_ADDRESS)

ALLOW_ADMINISTRATOR = $(IP_ADDRESS), $(CONDOR_HOST), condor_pool@$(UID_DOMAIN), EXAMPLE_POOL

ALLOW_WRITE = $(CONDOR_HOST), $(IP_ADDRESS)/8

ALLOW_READ = $(CONDOR_HOST), $(IP_ADDRESS)/8

ALLOW_NEGOTIATOR = $(CONDOR_HOST), condor_pool@$(UID_DOMAIN)

ALLOW_ADVERTISE_MASTER = $(IP_ADDRESS)/8

ALLOW_ADVERTISE_STARTD = $(IP_ADDRESS)/8

ALLOW_ADVERTISE_SCHEDD = $(IP_ADDRESS)/8

ALLOW_DAEMON = condor_pool@$(UID_DOMAIN), condor@$(UID_DOMAIN), $(IP_ADDRESS)

DAEMON_LIST = MASTER, STARTD, SCHEDD, NEGOTIATOR, COLLECTOR

# Do not phone home

CONDOR_DEVELOPERS = NONE

CONDOR_DEVELOPERS_COLLECTOR = NONE

SSH_TO_JOB_SSHD_CONFIG_TEMPLATE = /etc/condor/condor_ssh_to_job_sshd_config_template

Submit/Execute Node on Linux:

RELEASE_DIR = /usr

LOCAL_DIR = /var

LOCAL_CONFIG_FILE = /etc/condor/condor_config.local

REQUIRE_LOCAL_CONFIG_FILE = false

LOCAL_CONFIG_DIR = /etc/condor/config.d

## Pathnames

RUN = $(LOCAL_DIR)/run/condor

LOG = $(LOCAL_DIR)/log/condor

LOCK = $(LOCAL_DIR)/lock/condor

SPOOL = $(LOCAL_DIR)/spool/condor

EXECUTE = $(LOCAL_DIR)/lib/condor/execute

BIN = $(RELEASE_DIR)/bin

LIB = $(RELEASE_DIR)/lib/condor

INCLUDE = $(RELEASE_DIR)/include/condor

SBIN = $(RELEASE_DIR)/sbin

LIBEXEC = $(RELEASE_DIR)/lib/condor/libexec

SHARE = $(RELEASE_DIR)/share/condor

GANGLIA_LIB64_PATH = /lib,/usr/lib,/usr/local/lib

PROCD_ADDRESS = $(RUN)/procd_pipe

use SECURITY : HOST_BASED

# Substitute the IP address of the central manager:
CONDOR_HOST = 192.168.188.xy

COLLECTOR_HOST = $(CONDOR_HOST)

NO_DNS = True

DEFAULT_DOMAIN_NAME = MY_DOMAIN

ALLOW_CONFIG = $(IP_ADDRESS)

ALLOW_ADMINISTRATOR = $(IP_ADDRESS), $(CONDOR_HOST), condor_pool@$(UID_DOMAIN), EXAMPLE_POOL

ALLOW_WRITE = $(CONDOR_HOST), $(IP_ADDRESS)/8

ALLOW_READ = $(CONDOR_HOST), $(IP_ADDRESS)/8

ALLOW_ADVERTISE_MASTER = $(IP_ADDRESS)/8

ALLOW_ADVERTISE_STARTD = $(IP_ADDRESS)/8

ALLOW_ADVERTISE_SCHEDD = $(IP_ADDRESS)/8

ALLOW_DAEMON = condor_pool@$(UID_DOMAIN), condor@$(UID_DOMAIN), $(IP_ADDRESS)

DAEMON_LIST = MASTER, STARTD, SCHEDD

# Do not phone home

CONDOR_DEVELOPERS = NONE

CONDOR_DEVELOPERS_COLLECTOR = NONE

SSH_TO_JOB_SSHD_CONFIG_TEMPLATE = /etc/condor/condor_ssh_to_job_sshd_config_template

Submitting jobs to HTCondor pool with nextnanomat

Submit job

Add a job to the Batch list in the Run tab.

Click on the Run in HTCondor Cluster button (button with triangle and network).

Show information on HTCondor cluster

Click on Show Additional Info for Cluster Simulation.

Press the Refresh button on the right.

The results of the condor_status command are shown, i.e. the number of compute slots is displayed. You can select another HTCondor command such as condor_q to show the status of your submitted jobs: select condor_q and then press the Refresh button.

You can type any command into the line System command:, e.g. dir. The button Open Documentation opens the online documentation (this website).

Results of HTCondor simulations

Once your HTCondor jobs are finished, the results are automatically copied back to your simulation output folder <nextnano simulation output folder>\<name of input file>\. For debugging purposes regarding the HTCondor job, you can analyze the generated log file <input file name>.log.

Useful HTCondor commands for the Command Prompt

- condor_submit <filename>.sub: Submits a job to the pool.

- condor_q: Shows the current state of your own jobs in the queue.

- condor_q -nobatch -global -allusers: Shows the state of all jobs in the cluster, of all users.

- condor_q -goodput -global -allusers: Shows the state and occupied CPU of all jobs in the cluster.

- condor_q -allusers -global -analyze: Detailed information for every job in the cluster.

- condor_q -global -allusers -hold: Shows why jobs are in hold state.

- condor_status: Shows the state of all available resources.

- condor_status -long: Shows the state of all available resources and much other information.

- condor_status -debug: Shows the state of all available resources and some additional information, e.g. WARNING: Saw slow DNS query, which may impact entire system: getaddrinfo(<Computername>) took 11.083566 seconds.

- condor_rm: Removes jobs from a queue.

- condor_rm -all: Removes all jobs from a queue.

- condor_rm <cluster>.<id>: Removes the job with ID <id> in cluster <cluster>. If only a single number is given, it is interpreted as the cluster, and all jobs of that cluster are removed.

- condor_release -all: If any jobs are in hold state, use this command to release them so that they can run again.

- condor_restart: Restarts all HTCondor daemons/services after changes in the config file. It might also resolve problems when no computer is found in the network.

- condor_version: Returns the version number of HTCondor.

- condor_store_cred query: Returns information about the credentials stored for HTCondor jobs.

- condor_history: Lists jobs that have left the queue (e.g. completed or removed jobs). If the status of a specific job ID has the value ST=C, this job has completed (C).

- condor_status -master: Returns Name, HTCondor version, CPU and memory of the central manager.

Open the Command Prompt cmd.exe as Administrator and type in: net start condor. This has the same effect as restarting your computer, i.e. the service condor is started. This is useful if you have changed your local condor_config file.

HTCondor Pool - Managing Slots

Each PC runs one condor_startd daemon. By default, the condor_startd automatically divides the machine into slots, placing one core in each slot. For example, a 6-core computer with hyperthreading has 12 logical processors. Alternatively, the cores (or logical processors) can be distributed among the slots as follows.

SLOT_TYPE_1 = cpus=4

SLOT_TYPE_2 = cpus=4

SLOT_TYPE_3 = cpus=2

SLOT_TYPE_4 = cpus=1

SLOT_TYPE_5 = cpus=1

SLOT_TYPE_1_PARTITIONABLE = TRUE

SLOT_TYPE_2_PARTITIONABLE = TRUE

SLOT_TYPE_3_PARTITIONABLE = TRUE

SLOT_TYPE_4_PARTITIONABLE = TRUE

SLOT_TYPE_5_PARTITIONABLE = TRUE

NUM_SLOTS_TYPE_1 = 1

NUM_SLOTS_TYPE_2 = 1

NUM_SLOTS_TYPE_3 = 1

NUM_SLOTS_TYPE_4 = 1

NUM_SLOTS_TYPE_5 = 1
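The slot sizes above add up to 4 + 4 + 2 + 1 + 1 = 12 cores, i.e. the 12 logical processors of the hyperthreaded 6-core example. A short Python sketch can check such a sum for a given configuration text. It only understands the SLOT_TYPE_<n> = cpus=<k> and NUM_SLOTS_TYPE_<n> = <m> forms used here (not percentages such as 100%), and the function name is hypothetical.

```python
import re

# The slot layout from the example configuration above
EXAMPLE = """
SLOT_TYPE_1 = cpus=4
SLOT_TYPE_2 = cpus=4
SLOT_TYPE_3 = cpus=2
SLOT_TYPE_4 = cpus=1
SLOT_TYPE_5 = cpus=1
NUM_SLOTS_TYPE_1 = 1
NUM_SLOTS_TYPE_2 = 1
NUM_SLOTS_TYPE_3 = 1
NUM_SLOTS_TYPE_4 = 1
NUM_SLOTS_TYPE_5 = 1
"""

SLOT_RE = re.compile(r"\s*SLOT_TYPE_(\d+)\s*=\s*cpus=(\d+)\s*", re.IGNORECASE)
COUNT_RE = re.compile(r"\s*NUM_SLOTS_TYPE_(\d+)\s*=\s*(\d+)\s*", re.IGNORECASE)


def cores_per_slot_type(config: str) -> dict:
    """Return {slot type: cores used by all slots of that type}."""
    cpus, counts = {}, {}
    for line in config.splitlines():
        m = SLOT_RE.fullmatch(line)
        if m:
            cpus[int(m.group(1))] = int(m.group(2))
            continue
        m = COUNT_RE.fullmatch(line)
        if m:
            counts[int(m.group(1))] = int(m.group(2))
    # Cores of one slot x number of slots of this type (a missing count is treated as 0 here)
    return {t: cpus[t] * counts.get(t, 0) for t in sorted(cpus)}


total = sum(cores_per_slot_type(EXAMPLE).values())
print(f"cores assigned to slots: {total}")
```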

PartitionableSlot: For SMP (symmetric multiprocessing) machines, a boolean value identifying that this slot may be partitioned.

DynamicSlot: For SMP machines that allow dynamic partitioning of a slot, this boolean value identifies that this dynamic slot may be partitioned.

SlotID: For SMP machines, the integer that identifies the slot.

A useful command is

condor_status -af Name TotalCpus DynamicSlot PartitionableSlot SlotID

It returns the requested properties of each slot: Name, TotalCpus, DynamicSlot, PartitionableSlot and SlotID.
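The condor_status -af command above prints one line per slot with space-separated values. Assuming that layout, with undefined attributes printed as undefined and slot names of the form slot1@host or slot1_1@host, the following Python sketch groups the slots by machine and counts partitionable and dynamic slots. The function name and the host names in the test data are hypothetical.

```python
from collections import defaultdict


def summarize_slots(af_output: str) -> dict:
    """Group 'Name TotalCpus DynamicSlot PartitionableSlot SlotID' lines by machine."""
    machines = defaultdict(lambda: {"total_cpus": 0, "partitionable": 0, "dynamic": 0})
    for line in af_output.splitlines():
        fields = line.split()
        if len(fields) != 5:
            continue  # skip blank or unexpected lines
        name, total_cpus, dynamic, partitionable, _slot_id = fields
        host = name.split("@", 1)[-1]  # slot1_2@homer.simpson.com -> homer.simpson.com
        info = machines[host]
        info["total_cpus"] = int(float(total_cpus))
        info["partitionable"] += partitionable.lower() == "true"
        info["dynamic"] += dynamic.lower() == "true"
    return dict(machines)
```

You could feed it the text captured from the command, e.g. subprocess.run(["condor_status", "-af", "Name", "TotalCpus", "DynamicSlot", "PartitionableSlot", "SlotID"], capture_output=True, text=True).stdout.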

Dynamic slots

In our pool we have chosen dynamic partitioning, which gives full flexibility. For instance, a quad-core CPU that is dynamically partitioned can accept

- 4 single-threaded jobs (request_cpus = 1),

- 2 jobs with 2 threads each (request_cpus = 2),

- 2 jobs of which one is single-threaded (request_cpus = 1) and the other uses 3 threads (request_cpus = 3), or

- 1 job with 4 threads (request_cpus = 4).
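The cases listed above are examples; a short Python sketch enumerates every way of filling a quad-core partitionable slot with jobs that request whole cores, which also yields 2 + 1 + 1. It only counts cores: HTCondor also carves memory and disk out of a partitionable slot according to request_memory and request_disk, which this sketch ignores. The function name is hypothetical.

```python
from typing import List, Optional


def cpu_splits(cores: int, largest: Optional[int] = None) -> List[List[int]]:
    """Enumerate all ways to fill `cores` with jobs requesting whole cores (order ignored)."""
    if largest is None:
        largest = cores
    if cores == 0:
        return [[]]  # nothing left to fill: exactly one (empty) completion
    splits = []
    # Pick the biggest job first, then fill the rest with jobs that are not bigger,
    # so that every combination is produced exactly once
    for first in range(min(cores, largest), 0, -1):
        for rest in cpu_splits(cores - first, first):
            splits.append([first] + rest)
    return splits


for split in cpu_splits(4):
    print(" + ".join(f"request_cpus = {n}" for n in split))
```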

####################################################

# Dynamic partitioning

# We use HTCondor's dynamic partitioning mechanism.

# Each PC has one partitionable whole machine slot.

# (It seems that hyperthreading is not taken into account.)

####################################################

NUM_SLOTS = 1

NUM_SLOTS_TYPE_1 = 1

SLOT_TYPE_1 = 100%

SLOT_TYPE_1_PARTITIONABLE = true

SLOT_WEIGHT = Cpus

Machine states

A machine is in one of the following 6 states. The most important ones are Owner, Unclaimed and Claimed.

Backfill: (not relevant for us)

Owner: The machine is being used by the machine owner, and/or is not available to run HTCondor jobs. When the machine first starts up, it begins in this state.

Unclaimed: The machine is available to run HTCondor jobs, but it is not currently doing so.

Matched: The machine is available to run jobs, and it has been matched by the negotiator with a specific schedd. That schedd just has not yet claimed this machine. In this state, the machine is unavailable for further matches.

Claimed: The machine has been claimed by a schedd.

Preempting: The machine was claimed by a schedd, but is now preempting that claim for one of the following reasons:

- The owner of the machine came back

- Another user with higher priority has jobs waiting to run.

- Another request that this resource would rather serve was found.

Machine activities

Each machine state can have different activities. The machine state Claimed can have one of these four activities:

- Idle

- Busy

- Suspended

- Retiring

Configuration options for the Central Manager computer

With the following option in the condor_config file on the central manager, you can set a policy that spreads the jobs out over several machines rather than filling all slots of one computer before filling the slots of the other computers.

##------nn: SPREAD JOBS BREADTH-FIRST OVER SERVERS

##-- Jobs are "spread out" as much as possible,

## so that each machine is running the fewest number of jobs.

NEGOTIATOR_PRE_JOB_RANK = isUndefined(RemoteOwner) * (- SlotId)
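Among unclaimed slots, this expression evaluates to -SlotId, so the slot with the lowest SlotId ranks highest and the first slot of every machine is used before any second slot. The following Python sketch is a toy model of that effect, assuming static slots numbered 1 to n on each machine. It is not the negotiator's matchmaking algorithm; the function name and the tie-breaking by machine name are assumptions of the sketch.

```python
def place_jobs(slots_per_machine: dict, n_jobs: int) -> dict:
    """Toy model of NEGOTIATOR_PRE_JOB_RANK = isUndefined(RemoteOwner) * (- SlotId).

    Every free slot has rank -SlotId, so the free slot with the lowest SlotId
    (on any machine) is matched first. Returns the number of jobs per machine.
    """
    free = [(slot_id, name)
            for name, n in slots_per_machine.items()
            for slot_id in range(1, n + 1)]
    jobs_per_machine = {name: 0 for name in slots_per_machine}
    for _ in range(min(n_jobs, len(free))):
        # Highest rank = lowest SlotId; ties are broken by machine name (sketch assumption)
        best = min(free)
        free.remove(best)
        jobs_per_machine[best[1]] += 1
    return jobs_per_machine


print(place_jobs({"homer": 4, "lisa": 4}, 2))
```

With two 4-slot machines and two jobs, each machine receives one job instead of both jobs landing on the same computer.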

FAQ

Q: I submitted a job to HTCondor, but nothing happens. The nextnanomat GUI says “transmitted”.

A: It could be that nextnanomat has not read in all required settings. You can try the command condor_restart.

Please make sure that you entered your credentials using condor_store_cred add -debug. You should then restart nextnanomat.

Q: I submitted a job to HTCondor, but the Batch line of nextnanomat is stuck with preparing. What is wrong?

A1: Did you store your credentials after the installation of HTCondor? If not, enter condor_store_cred add into the command prompt to add your password, see above (Recommended Installation Process).

A2: Did you change your password recently? If yes, you have to re-enter your credentials for HTCondor. Enter condor_store_cred add into the command prompt to add your password, see above (Recommended Installation Process). If this does not work, try to enter condor_store_cred add -debug for more output information on the error.

Q: I specified target machines in Tools - Options. Afterwards, every job submitted to HTCondor is stuck with transmitting. What is wrong?

A: The value for UID_DOMAIN within the condor_config file needs to be the same for every computer of your cluster. (You can easily test this in a command prompt with condor_status -af uiddomain.) If the values differ, no matching computer will be found and the job will not be transmitted successfully.

Problems with HTCondor

Error: communication error

If you receive the following error when you type in condor_status

C:\Users\"<your user name>">condor_status

Error: communication error

CEDAR:6001:Failed to connect to <123.456.789.123>

you can check whether the computer associated with this IP address is your HTCondor computer using the following command.

nslookup 123.456.789.123

It is also a good idea to type in

nslookup

This will return the name of the Default Server that resolves DNS names.

If it is not the expected computer, you can open a Command Prompt as Administrator and type in ipconfig /flushdns to flush the DNS Resolver Cache.

C:\Users\"<your user name>">ipconfig /flushdns

If the DNS address cannot be resolved correctly, this could be related to a VPN connection that has configured a different default server for mapping domain names to IP addresses. For example, your Windows domain may be called contoso.com (which is only visible within your own network and your own HTCondor pool), but your DNS resolves it to www.contoso.com (which might be outside your local HTCondor pool).
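If you prefer to script the reverse lookup, the following Python sketch does it with the standard socket module; the function name is hypothetical. Note that it asks the operating system's resolver (which also consults the hosts file), whereas nslookup queries the DNS server directly, so the two can give different answers. It validates the address first, so a placeholder such as 123.456.789.123 is rejected without a network query.

```python
import ipaddress
import socket
from typing import Optional


def reverse_lookup(ip: str) -> Optional[str]:
    """Return the host name registered for `ip`, or None if it is invalid or unresolved."""
    try:
        ipaddress.ip_address(ip)  # rejects strings that are not valid IP addresses
    except ValueError:
        return None
    try:
        return socket.gethostbyaddr(ip)[0]
    except OSError:  # socket.herror and socket.gaierror are subclasses of OSError
        return None
```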

Error: condor_store_cred add failed with Operation failed. Make sure your ALLOW_WRITE setting include this host.

Solution: Edit the condor_config file and add the host, i.e. the local computer name (here: nn-delta).

ALLOW_WRITE = $(CONDOR_HOST), $(IP_ADDRESS)

==> ALLOW_WRITE = $(CONDOR_HOST), $(IP_ADDRESS), nn-delta

Error? Check the Log files

If you encounter any strange errors, you can find some hints in the history or Log files generated by HTCondor. You can find them here:

C:\condor\spool

history

C:\condor\log

CollectorLog

MasterLog

MatchLog

NegotiatorLog

ProcLog

SchedLog

ShadowLog

SharedPortLog

StarterLog

StartLog

More details can be found here: Logging in HTCondor
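When these directories contain many files, a small Python sketch can collect the suspicious lines in one go. Searching for the string ERROR is a heuristic assumption, since not every problem is logged with that word; the function name is hypothetical.

```python
from pathlib import Path


def find_log_lines(log_dir: str, needle: str = "ERROR") -> list:
    """Return (file name, line) pairs containing `needle` from all files in log_dir."""
    hits = []
    for path in sorted(Path(log_dir).iterdir()):
        if not path.is_file():
            continue
        # Log files are plain text; undecodable bytes are replaced instead of raising
        with path.open(encoding="utf-8", errors="replace") as handle:
            for line in handle:
                if needle in line:
                    hits.append((path.name, line.rstrip("\n")))
    return hits
```

For example, find_log_lines(r"C:\condor\log") or find_log_lines(r"C:\condor\log", "DNS").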

Known bugs

HTCondor < 8.9.5 works with all nextnano executables

HTCondor >= 8.9.5 works with nextnano executables newer than 2020-Jan

Run your custom executable on HTCondor with nextnanomat

You can even run your own executable with nextnanomat locally or on HTCondor! We tested the following programs:

- HelloWorld.exe

- Quantum ESPRESSO (pw.exe)

- ABINIT (abinit.exe)

Input file identifier

An input file identifier is a special string in the input file that signals to nextnanomat whether the input file is an input file for the nextnano++, nextnano³, nextnano.NEGF or nextnano.MSB tools, or for a custom executable.
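The idea of an identifier can be illustrated with a plain substring search. The following Python sketch is not nextnanomat's implementation; it only uses the custom identifiers given on this page (HelloWorld, &control, acell), because the identifiers of the nextnano tools are not listed here. If an input file contains several identifiers, the order of the mapping decides which tool is chosen. The names CUSTOM_IDENTIFIERS and detect_tool are hypothetical.

```python
from typing import Optional

# Identifiers taken from the custom-executable examples on this page
CUSTOM_IDENTIFIERS = {
    "HelloWorld": "HelloWorld.exe",
    "&control": "Quantum ESPRESSO (pw.exe)",
    "acell": "ABINIT (abinit.exe)",
}


def detect_tool(input_text: str, identifiers: dict = CUSTOM_IDENTIFIERS) -> Optional[str]:
    """Return the tool of the first identifier (in mapping order) found in the text."""
    for identifier, tool in identifiers.items():
        if identifier in input_text:
            return tool
    return None
```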

Settings for Hello World (HW)

In nextnanomat, we need the following settings:

- Path to executable file: e.g. D:\HW\HelloWorld.exe

- Input file identifier: e.g. HelloWorld

- Working directory: Select ‘Simulation output folder’

- HTCondor: Output folder and files (transfer_output_files = …): .

Open the input file input_file_for_HelloWorld.in (or any other input file that contains the string HelloWorld) and run the simulation either locally or on HTCondor.

Settings for Quantum ESPRESSO (QE)

Our folder structure is

- D:\QE\inputfile\My_QE_inputfile.in (QE input file)

- D:\QE\input\pseudo\C.UPF (pseudopotential file for atom species ‘C’ as specified in the input file)

- D:\QE\exe\pw.exe (QE executable file)

- D:\QE\exe\*.dll (all dll files needed by pw.exe)

- D:\QE\working_directory\QE_nextnanomat_HTCondor.bat (batch file)
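Before submitting, it can help to check that this folder structure is complete. The Python sketch below lists missing files relative to the QE root folder; QE_REQUIRED mirrors the structure above, the function name is hypothetical, and the DLLs are not checked because their names are not given.

```python
from pathlib import Path

# Relative paths from the QE folder structure above (D:\QE\...)
QE_REQUIRED = [
    "inputfile/My_QE_inputfile.in",
    "input/pseudo/C.UPF",
    "exe/pw.exe",
    "working_directory/QE_nextnanomat_HTCondor.bat",
]


def missing_files(root: str, required=QE_REQUIRED) -> list:
    """List the required files that do not exist below `root`."""
    base = Path(root)
    return [rel for rel in required if not (base / rel).is_file()]
```

For example, missing_files(r"D:\QE") returns an empty list when everything is in place.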

In nextnanomat, we need the following settings:

- Path to executable file: e.g. D:\QE\working_directory\QE_nextnanomat_HTCondor.bat

- Path to folder with additional files: D:\QE\

- Input file identifier: e.g. &control

- Working directory: Select ‘Simulation output folder’

- HTCondor: Output folder and files (transfer_output_files = …): .

- (Additional arguments passed to the executable: $INPUTFILE)

The batch file (*.bat) contains the following content:

.\exe\pw.exe -in .\inputfile\My_QE_inputfile.in

This means that pw.exe is started relative to the working directory and reads in the specified input file. In this input file, the following quantities are specified:

- C.UPF: name of the pseudopotential file

- ./input/pseudo/: path to the pseudopotential file C.UPF

Open the input file My_QE_inputfile.in and run the simulation either locally or on HTCondor.

Things that could be improved:

- Write all files into the output folder created by nextnanomat. In particular, the folder output/ should be moved.

- condor_exec.exe is deleted (better: do not copy it back).

- All *.dll files should be deleted (better: do not copy them back).

- Do not copy back *.exe and *.dll files (both HTCondor and local).
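Until the copy-back behavior is improved, the unwanted files can be removed after the results have arrived. The following Python sketch deletes *.exe and *.dll files below a simulation output folder and returns their names. It is a manual post-processing step, not a nextnanomat option, and the function name is hypothetical. It deletes irreversibly, so point it only at an output folder, never at D:\QE\exe.

```python
from pathlib import Path


def remove_copied_binaries(output_folder: str, patterns=("*.exe", "*.dll")) -> list:
    """Delete executables and DLLs copied back into the output folder; return their names."""
    removed = []
    for pattern in patterns:
        # rglob also searches subfolders; list() finishes the search before deleting
        for path in list(Path(output_folder).rglob(pattern)):
            if path.is_file():
                path.unlink()
                removed.append(path.name)
    return sorted(removed)
```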

Settings for ABINIT

Our folder structure is

- D:\abinit\inputfile\t30.in (ABINIT input file)

- D:\abinit\input\* (input files needed by ABINIT)

- D:\abinit\exe\abinit.exe (ABINIT executable file)

- D:\abinit\exe\*.dll (all dll files needed by abinit.exe)

- D:\abinit\working_directory\abinit_nextnanomat.bat (batch file)

In nextnanomat, we need the following settings:

- Path to executable file: e.g. D:\abinit\working_directory\abinit_nextnanomat.bat

- Path to folder with additional files: D:\abinit\

- Input file identifier: e.g. acell

- Working directory: Select ‘Simulation output folder’

- HTCondor: Output folder and files (transfer_output_files = …): .

- Additional arguments passed to the executable: (empty)

The batch file (*.bat) contains the following content:

.\exe\abinit.exe < .\input\ab_nextnanomat_HTCondor.files

This means that abinit.exe is started relative to the working directory and reads in the specified file. In this file, the following quantities are specified:

- .\inputfile\t30.in: name of the input file

- .\input\14si.pspnc

Open the input file t30.in and run the simulation either locally or on HTCondor.

Notes

- condor_exec.exe is deleted (better: do not copy it back).

- All *.dll files should be deleted (better: do not copy them back).

- Do not copy back *.exe and *.dll files (both HTCondor and local).

Last update: 08/01/2025